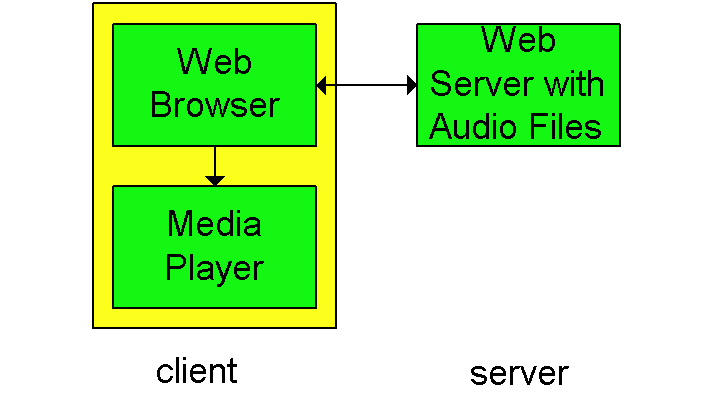

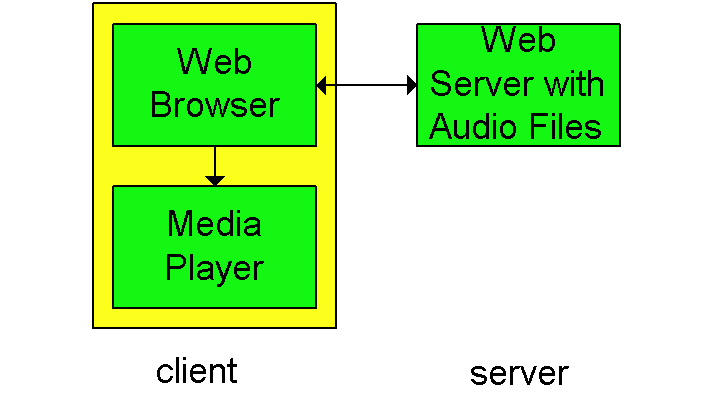

Figure 6.2-1 A naive implementation for audio streaming.

In audio/video streaming, clients request compressed audio/video files that reside on servers. As we shall discuss in this section, the servers can be "ordinary" Web servers, or can be special streaming servers tailored for the audio/video streaming application. The files on the servers can contain any type of audio/video content, including a professor's lectures, rock songs, movies, television shows, recorded sporting events, etc. Upon client request, the server directs an audio/video file to the client by sending the file into a socket. (Sockets are discussed in Sections 2.6-2.7.) Both TCP and UDP sockets are used in practice. Before the audio/video file is sent into the network, the file is segmented, and the segments are typically encapsulated with special headers appropriate for audio/video traffic. Real-Time Transport Protocol (RTP), discussed in Section 6.4, is a public-domain standard for encapsulating the segments. Once the client begins to receive the requested audio/video file, the client begins to render the file, typically within a few seconds. Most existing products also provide for user interactivity, e.g., pause/resume and temporal jumps forward and backward within the audio/video file. User interactivity also requires a protocol for client/server interaction. Real Time Streaming Protocol (RTSP), discussed at the end of this section, is a public-domain protocol for providing user interactivity.

Audio/video streaming is often requested by users through a Web client (i.e., a browser). But because audio/video playout is not integrated directly in today's Web clients, a separate helper application is required for playing out the audio/video. The helper application is often called a media player, the most popular of which are currently RealNetworks' RealPlayer and Microsoft's Windows Media Player. The media player performs several functions, including:

Consider first the case of audio streaming. When an audio file resides on a Web server, the audio file is an ordinary object in the server's file system, just as HTML and JPEG files are. When a user wants to hear the audio file, the user's host establishes a TCP connection with the Web server and sends an HTTP request for the object (see Section 2.2); upon receiving such a request, the Web server bundles the audio file in an HTTP response message and sends the response message back into the TCP connection. The case of video can be somewhat trickier, only because the audio and video parts of the "video" may be stored in two different files, that is, they may be two different objects in the Web server's file system. In this case, two separate HTTP requests are sent to the server (over two separate TCP connections for HTTP/1.0), and the audio and video files arrive at the client in parallel. It is up to the client to manage the synchronization of the two streams. It is also possible that the audio and video are interleaved in the same file, so that only one object has to be sent to the client. To keep the discussion simple, for the case of "video" we assume for the remainder of this section that the audio and video are contained in one file.
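The request/response exchange described above can be sketched in a few lines of Python. This is only an illustrative sketch, not a full HTTP client: the host name `www.example.com`, the object path `/lecture.au`, and the helper names `build_http_get` and `split_response` are assumptions made for the example, and nothing is actually sent over a network.

```python
def build_http_get(host, path):
    """Return a minimal HTTP/1.0 GET request for a single object."""
    lines = [
        f"GET {path} HTTP/1.0",
        f"Host: {host}",
        "",  # blank line terminates the header section
        "",
    ]
    return "\r\n".join(lines).encode("ascii")


def split_response(raw):
    """Split a raw HTTP response into (headers dict, body bytes)."""
    head, _, body = raw.partition(b"\r\n\r\n")
    header_lines = head.decode("iso-8859-1").split("\r\n")
    headers = {}
    for line in header_lines[1:]:  # skip the status line
        name, _, value = line.partition(":")
        headers[name.strip().lower()] = value.strip()
    return headers, body


# Hypothetical audio object on a hypothetical Web server.
request = build_http_get("www.example.com", "/lecture.au")

# A hand-written response standing in for what the server would send back:
# the audio bytes are simply the body of an ordinary HTTP response.
fake_response = b"HTTP/1.0 200 OK\r\nContent-Type: audio/basic\r\n\r\nAUDIO-BYTES"
headers, audio = split_response(fake_response)
```

From the server's point of view the audio file is handled exactly like an HTML or JPEG object; only the `Content-Type` header tells the client what kind of object arrived.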

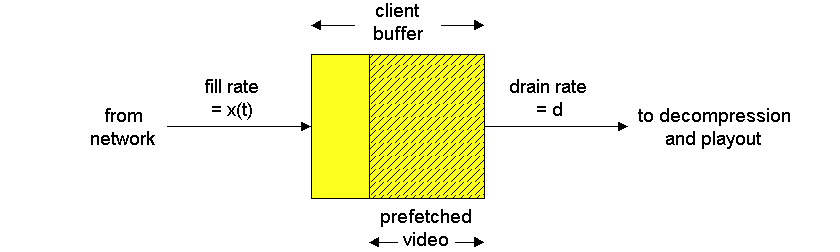

A naive architecture for audio/video streaming is shown in Figure 6.2-1. In this architecture:

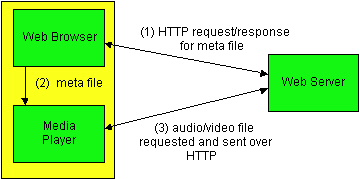

Although this approach is very simple, it has a major drawback: the media player (i.e., the helper application) must interact with the server through the intermediary of a Web browser. This can lead to many problems. In particular, when the browser is an intermediary, the entire object must be downloaded before the browser passes the object to a helper application; the resulting initial delay is typically unacceptable for audio/video clips of moderate length. For this reason, audio/video streaming implementations typically have the server send the audio/video file directly to the media player process. In other words, a direct socket connection is made between the server process and the media player process. As shown in Figure 6.2-2, this is typically done by making use of a meta file, which is a file that provides information (e.g., URL, type of encoding, etc.) about the audio/video file that is to be streamed.

A direct TCP connection between the server and the media player is obtained as follows:

We have just learned how a meta file can allow a media player to communicate directly with a Web server housing an audio/video file. Yet many companies that sell products for audio/video streaming do not recommend the architecture we just described. This is because the architecture has the media player communicate with the server over HTTP and hence also over TCP. HTTP is often considered insufficiently rich to allow for satisfactory user interaction with the server; in particular, HTTP does not easily allow a user (through the media player) to send pause/resume, fast-forward, and temporal jump commands to the server. TCP is often considered inappropriate for audio/video streaming, particularly when users are behind slow modem links. This is because, upon packet loss, the TCP sender rate almost comes to a halt, which can result in extended periods of time during which the media player is starved. Nevertheless, audio and video are often streamed from Web servers over TCP with satisfactory results.
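To make the starvation effect concrete, the following toy model counts the seconds in which a media player has too little data to keep playing. It is a rough sketch under stated assumptions, not a model of real TCP behavior: the per-second arrival amounts, the 64 kbit/s playout rate, the initial buffer sizes, and the function name `starved_seconds` are all hypothetical.

```python
def starved_seconds(arrivals_kbit, playout_kbps, prebuffer_kbit=0.0):
    """Count one-second intervals in which the player runs out of data.

    arrivals_kbit  -- kilobits delivered by the network in each second
    playout_kbps   -- kilobits the player consumes per second of playout
    prebuffer_kbit -- kilobits already buffered before playout starts
    """
    buffered = prebuffer_kbit
    starved = 0
    for arrived in arrivals_kbit:
        buffered += arrived
        if buffered >= playout_kbps:
            buffered -= playout_kbps  # one full second is played out
        else:
            starved += 1              # playout stalls; data stays buffered
    return starved


# Hypothetical trace: the sender nearly halts for three seconds after a loss.
trace = [64, 64, 0, 0, 0, 64, 128, 64]

no_buffer = starved_seconds(trace, playout_kbps=64)
small_buffer = starved_seconds(trace, playout_kbps=64, prebuffer_kbit=128)
large_buffer = starved_seconds(trace, playout_kbps=64, prebuffer_kbit=192)
```

In this trace the player stalls for three seconds with no initial buffer, one second with 128 kbit buffered, and not at all with 192 kbit buffered, which suggests why streaming over TCP can still give satisfactory results when the client buffers a few seconds of media before rendering.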

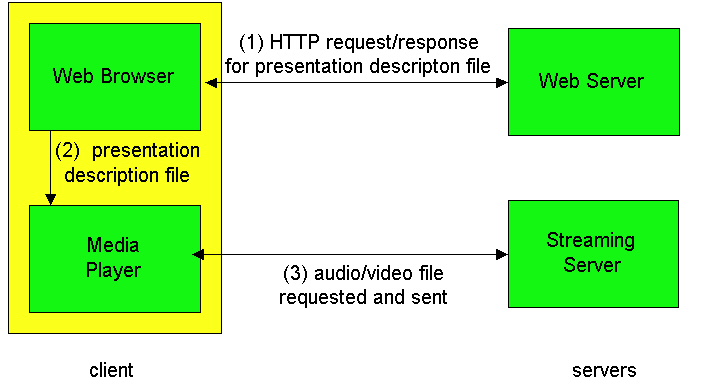

This architecture requires two servers, as shown in Figure 6.2-3. One server, the HTTP server, serves Web pages (including meta files). The second server, the streaming server, serves the audio/video files. The two servers can run on the same end system or on two distinct end systems. (If the Web server is very busy serving Web pages, it may be advantageous to put the streaming server on its own machine.) The steps for this architecture are similar to those described in the previous architecture. However, now the media player requests the file from a streaming server rather than from a Web server, and now the media player and streaming server can interact using their own protocols. These protocols can allow for rich user interaction with the audio/video stream. Furthermore, the audio/video file can be sent to the media player over UDP instead of TCP.

In the architecture of Figure 6.2-3, there are many options for delivering the audio/video from the streaming server to the media player. A partial list of the options is given below:

But before getting into the details of RTSP, let us indicate what RTSP does not do:

Recall from Section 2.3 that File Transfer Protocol (FTP) also uses the out-of-band notion. In particular, FTP uses two client/server pairs of sockets, each pair with its own port number: one client/server socket pair supports a TCP connection that transports control information; the other client/server socket pair supports a TCP connection that actually transports the file. The control TCP connection is the so-called out-of-band channel, whereas the TCP connection that transports the file is the so-called data (in-band) channel. The out-of-band channel is used for sending remote directory changes, remote file deletion, remote file renaming, file download requests, etc. The in-band channel transports the file itself. The RTSP channel is in many ways similar to FTP's control channel.

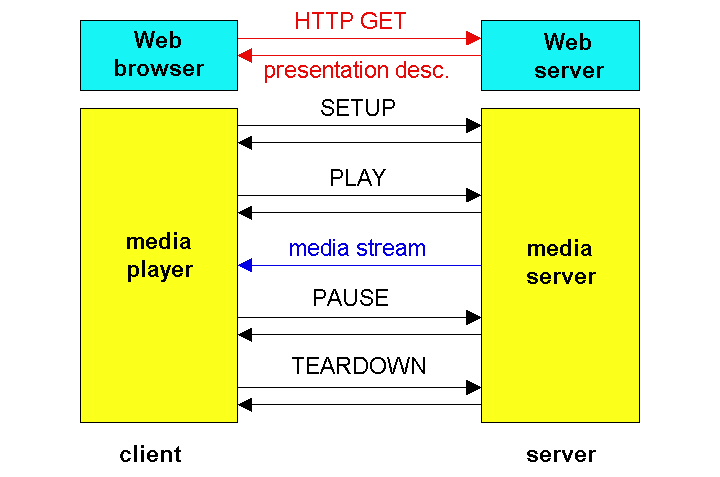

Let us now walk through a simple RTSP example, which is illustrated in Figure 6.2-5. The Web browser first requests a presentation description file from a Web server. The presentation description file can have references to several continuous-media files as well as directives for synchronization of the continuous-media files. Each reference to a continuous-media file begins with the URL method, rtsp:// . Below we provide a sample presentation file, which has been adapted from the paper [Schulzrinne]. In this presentation, an audio and a video stream are played in parallel and in lipsync (as part of the same "group"). For the audio stream, the media player can choose ("switch") between two audio recordings, a low-fidelity recording and a high-fidelity recording.

    <title>Twister</title>
    <session>
      <group language=en lipsync>
        <switch>
          <track type=audio
                 e="PCMU/8000/1"
                 src="rtsp://audio.example.com/twister/audio.en/lofi">
          <track type=audio
                 e="DVI4/16000/2" pt="90 DVI4/8000/1"
                 src="rtsp://audio.example.com/twister/audio.en/hifi">
        </switch>
        <track type="video/jpeg"
               src="rtsp://video.example.com/twister/video">
      </group>
    </session>
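As a rough illustration of what a media player must do with such a file, the sketch below pulls the track type and rtsp:// URL out of each `<track>` element. Because the sample is not well-formed XML (unquoted attributes, unclosed `<track>` tags), the sketch uses a regular expression rather than an XML parser; the pattern and the name `list_tracks` are assumptions made for this example, not part of any RTSP specification.

```python
import re

# The sample presentation description from the text.
DESCRIPTION = """<title>Twister</title>
<session>
<group language=en lipsync>
<switch>
<track type=audio
e="PCMU/8000/1"
src = "rtsp://audio.example.com/twister/audio.en/lofi">
<track type=audio
e="DVI4/16000/2" pt="90 DVI4/8000/1"
src="rtsp://audio.example.com/twister/audio.en/hifi">
</switch>
<track type="video/jpeg"
src="rtsp://video.example.com/twister/video">
</group>
</session>"""

# Capture the (optionally quoted) type attribute and the quoted rtsp:// src
# attribute of each <track ...> element, allowing whitespace around "=".
TRACK_RE = re.compile(
    r'<track\s+type\s*=\s*"?([^\s">]+)"?[^>]*?src\s*=\s*"(rtsp://[^"]+)"'
)


def list_tracks(description):
    """Return a list of (media type, rtsp URL) pairs, in document order."""
    return TRACK_RE.findall(description)


tracks = list_tracks(DESCRIPTION)
for media_type, url in tracks:
    print(media_type, url)
```

A real player would also need to honor the `<switch>` and `lipsync` directives, which this sketch ignores.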

The Web server encapsulates the presentation description file in an HTTP response message and sends the message to the browser. When the browser receives the HTTP response message, the browser invokes a media player (i.e., the helper application) based on the content-type: field of the message. The presentation description file includes references to media streams, using the URL method rtsp:// , as shown in the above sample. As shown in Figure 6.2-4, the player and the server then send each other a series of RTSP messages. The player sends an RTSP SETUP request, and the server sends an RTSP SETUP response. The player sends an RTSP PLAY request, say, for lofi audio, and the server sends an RTSP PLAY response. At this point, the streaming server pumps the lofi audio into its own in-band channel. Later, the media player sends an RTSP PAUSE request, and the server responds with an RTSP PAUSE response. When the user is finished, the media player sends an RTSP TEARDOWN request, and the server responds with an RTSP TEARDOWN response.
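The SETUP, PLAY, PAUSE, TEARDOWN sequence above can be viewed as a small state machine on the player side. The sketch below is a deliberately simplified illustration: the state names and the table are a reduced version of the state machine idea in RFC 2326, and the names `TRANSITIONS`, `next_state`, and `run` are assumptions made for this example.

```python
# (current state, request method) -> state after a successful response
TRANSITIONS = {
    ("INIT", "SETUP"): "READY",
    ("READY", "PLAY"): "PLAYING",
    ("PLAYING", "PAUSE"): "READY",
    ("READY", "TEARDOWN"): "INIT",
    ("PLAYING", "TEARDOWN"): "INIT",
}


def next_state(state, method):
    """Return the new session state, or raise if the request is not allowed."""
    try:
        return TRANSITIONS[(state, method)]
    except KeyError:
        raise ValueError(f"{method} is not valid in state {state}") from None


def run(methods, state="INIT"):
    """Apply a sequence of request methods, starting from the given state."""
    for method in methods:
        state = next_state(state, method)
    return state


# The walk-through from the text: set up, play, pause, then tear down.
final_state = run(["SETUP", "PLAY", "PAUSE", "TEARDOWN"])
```

Here `final_state` is `"INIT"` again, since TEARDOWN ends the session; asking for PLAY before SETUP raises `ValueError` in this sketch because no session exists yet.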

Each RTSP session has a session identifier, which is chosen by the server. The client initiates the session with the SETUP request, and the server responds to the request with an identifier. The client repeats the session identifier for each request, until the client closes the session with the TEARDOWN request. The following is a simplified example of an RTSP session:

    S: RTSP/1.0 200 1 OK
       Session: 4231
    C: PLAY rtsp://audio.example.com/twister/audio.en/lofi RTSP/1.0
       Session: 4231
       Range: npt=0-
    C: PAUSE rtsp://audio.example.com/twister/audio.en/lofi RTSP/1.0
       Session: 4231
       Range: npt=37
    C: TEARDOWN rtsp://audio.example.com/twister/audio.en/lofi RTSP/1.0
       Session: 4231
    S: 200 3 OK
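The client requests in this simplified session can be generated mechanically once the server has supplied the session identifier. The following is a minimal sketch that mirrors the simplified message layout used above; the helper name `rtsp_request` is an assumption made for this example, and real RFC 2326 messages carry additional headers (for instance a `CSeq` sequence number) that the simplified example omits.

```python
def rtsp_request(method, url, session=None, headers=None):
    """Format a simplified RTSP request as text.

    The session identifier, once assigned by the server in its SETUP
    response, is repeated by the client in every later request.
    """
    lines = [f"{method} {url} RTSP/1.0"]
    if session is not None:
        lines.append(f"Session: {session}")
    for name, value in (headers or {}).items():
        lines.append(f"{name}: {value}")
    # Header lines end with CRLF; an empty line terminates the message.
    return "\r\n".join(lines) + "\r\n\r\n"


URL = "rtsp://audio.example.com/twister/audio.en/lofi"
SESSION = 4231  # identifier taken from the server's response in the text

play = rtsp_request("PLAY", URL, SESSION, {"Range": "npt=0-"})
pause = rtsp_request("PAUSE", URL, SESSION, {"Range": "npt=37"})
teardown = rtsp_request("TEARDOWN", URL, SESSION)
```

Note that these control messages travel on the out-of-band RTSP channel; the audio itself flows separately on the in-band channel.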

References

[Schulzrinne] H. Schulzrinne, "A Comprehensive Multimedia Control Architecture for the Internet," NOSSDAV'97 (Network and Operating System Support for Digital Audio and Video), St. Louis, Missouri; May 19, 1997. Online version available.

[RealNetworks] RTSP Resource Center, http://www.real.com/devzone/library/fireprot/rtsp/

[RFC 2326] H. Schulzrinne, A. Rao, R. Lanphier, "Real Time Streaming Protocol (RTSP)," RFC 2326, April 1998.